In 1978, Thomas Barefoot was convicted of killing a police officer in Texas. During the sentencing phase of his trial, the prosecution called two psychiatrists to testify about Barefoot’s “future dangerousness,” a capital-sentencing requirement that asked the jury to determine if the defendant posed a threat to society.

The psychiatrists declared Barefoot a “criminal psychopath,” and warned that whether he was inside or outside a prison, there was a “one hundred percent and absolute chance” that he would commit future acts of violence that would “constitute a continuing threat to society.” Informed by these clinical predictions, the jury sentenced Barefoot to death.

Can neuroscience predict how likely someone is to commit another crime?

Although such psychiatric forecasting is less common now in capital cases, a battery of risk assessment tools has since been developed that aims to help courts determine appropriate sentencing, probation and parole. Many of these risk assessments use algorithms to weigh personal, psychological, historical and environmental factors to make predictions of future behavior. But it is an imperfect science, beset by accusations of racial bias and false positives.

Now a group of neuroscientists at the University of New Mexico proposes using brain imaging technology to improve risk assessments. Kent Kiehl, a professor of psychology, neuroscience and the law at the university, said that by measuring brain structure and activity, researchers might better predict the probability that an individual will offend again.

Neuroprediction, as it has been dubbed, evokes uneasy memories of a time when phrenologists used the shape of the skull to make pronouncements about a person’s intelligence, virtue and, in its most extreme iteration, racial inferiority.

Yet predicting likely human behavior based on algorithms is a fact of modern life, and not just in the criminal justice system. After all, what is Facebook if not an algorithm for calculating what we will like, what we will do, and who we are?

In a recent study, Kiehl and his team set out to discover whether brain age — an index of the volume and density of gray matter in the brain — could help predict rearrest.

Age is a key factor in standard risk assessments. On average, defendants between the ages of 18 and 25 are considered more likely to engage in risky behavior than their older counterparts. Even so, the researchers wrote, chronological age may not be an accurate measure of risk.

The advantage of brain age over chronological age is its specificity. It accounts for “individual differences” in brain structure and activity over time, which have an impact on decision-making and risk-taking.

After analyzing the brain scans of 1,332 men and boys, ages 12 to 65, held in state prisons and juvenile facilities in New Mexico and Wisconsin, the team found that by combining brain age and activity with psychological measures, such as impulse control and substance dependence, they could accurately predict rearrest in most cases.

The brain age experiment built on the findings from research Kiehl had conducted in 2013, which demonstrated that low activity in a brain region partially responsible for inhibition seemed more predictive of rearrest than the behavioral and personality factors used in risk assessments.

“This is the largest brain age study of its kind,” said Kiehl, adding that it was the first time brain age had been shown to be useful in predicting future antisocial behavior.

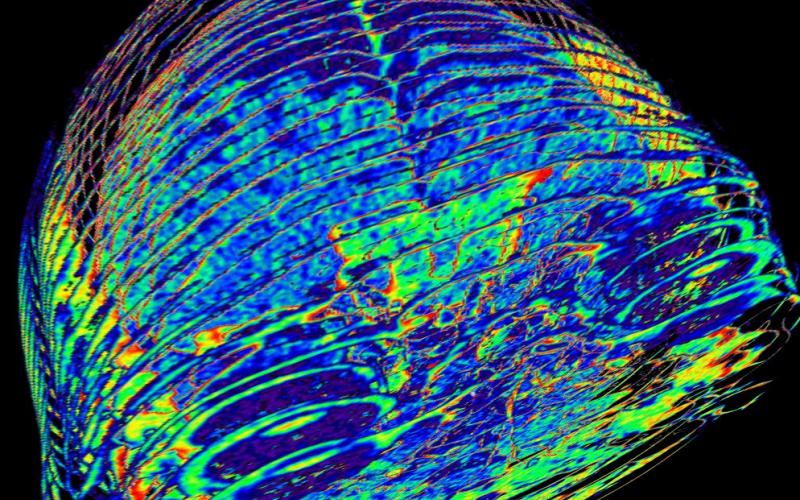

In the study, subjects lie inside an MRI scanner as a computer sketches the peaks and troughs of their brains to construct a profile. With hundreds of brain profiles, the researchers can train algorithms to look for unique patterns.

The algorithms, trained on these brain scans, sift through tens of thousands of patterns looking for a configuration that successfully identifies a fact about the subject: say, the individual’s age.

The trained algorithm is then applied to an entirely different population whose rearrest records are known, and asked to calculate the likelihood that each person was rearrested.
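For readers curious about the mechanics, the train-then-apply procedure can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the researchers’ method: a single made-up `gray_matter_index` stands in for the many features a real scan yields, a straight-line fit stands in for whatever learning algorithm the team actually used, and every number is invented. The sketch learns age from the brain measure in one group, then estimates a “brain age” for people in a second group and compares it with their actual age.

```python
# Toy sketch of the "brain age" idea. Everything here is hypothetical:
# a single made-up gray_matter_index stands in for the many features a real
# MRI scan yields, a straight-line fit stands in for the study's actual
# learning algorithm, and all numbers are invented.
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x with a single predictor."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    b = covariance / variance
    a = y_bar - b * x_bar
    return a, b


def predict_brain_age(model, gray_matter_index):
    """Apply the fitted line to one person's (hypothetical) brain measure."""
    a, b = model
    return a + b * gray_matter_index


def brain_age_gap(model, gray_matter_index, chronological_age):
    """Positive: the model reads the brain as older than the person's age."""
    return predict_brain_age(model, gray_matter_index) - chronological_age


# Step 1: train on one group whose ages are known (invented data in which
# the measure declines steadily with age).
train_gray_matter = [0.80, 0.75, 0.70, 0.65, 0.60]
train_age = [20, 30, 40, 50, 60]
model = fit_line(train_gray_matter, train_age)

# Step 2: apply the trained model to an entirely different group.
new_group = [
    {"id": "A", "age": 30, "gray_matter_index": 0.80},
    {"id": "B", "age": 30, "gray_matter_index": 0.70},
]
for person in new_group:
    estimate = predict_brain_age(model, person["gray_matter_index"])
    gap = brain_age_gap(model, person["gray_matter_index"], person["age"])
    print(f"{person['id']}: brain age {estimate:.1f}, gap {gap:+.1f} years")
```

With these invented numbers, both people in the second group are 30, but person A gets a brain age of about 20 and person B about 40. In the study described here, a brain-age estimate was combined with psychological measures and checked against known rearrest records; this sketch stops before that step.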

The researchers showed that reduced gray matter is closely connected to recidivism, confirming that younger brains are at higher risk of reoffense.

The team also tried to pinpoint specific parts of the brain that might influence criminal behavior. They zeroed in on irregularities in areas of the brain associated with empathy, moral decision-making, emotional processing and the interaction between positive and negative reinforcement in guiding actions. “These inferred limitations,” wrote Kiehl, “might contribute to poor decision-making and poor outcomes.” In other words, crime.

In an interview, Kiehl said that “brain imaging can’t tell you with 100 percent accuracy what an offender will do,” and added that, “neither can a pen-and-paper risk assessment.”

Over the past two decades, brain scans and other neuroscientific evidence have become commonplace in courtrooms, so much so that a defendant can file an “ineffective assistance of counsel” claim if his or her lawyer fails to introduce relevant brain tests. Defense lawyers also routinely submit brain imaging to bolster claims of their clients’ incompetency or insanity.

Still, some legal scholars and attorneys decry the growing presence of neuroscience in courtrooms, calling it a “double-edged sword” that either unduly exonerates defendants or marks them as irredeemable future dangers.

“But that’s not right,” said Deborah Denno, a professor and director of the Neuroscience and Law Center at Fordham University Law School, who conducted an analysis of every criminal case that used neuroscientific evidence from 1992 to 2012. Her analysis showed that brain evidence is typically introduced to aid fact-finding with more “complete, reliable, and precise information.” She also showed that it is rarely used to support arguments of future dangerousness.

To date, neuroprediction has not been admitted into courtrooms or parole hearings. Some scholars, like Thomas Nadelhoffer, a fellow at the Kenan Institute for Ethics at Duke University, who popularized the term neuroprediction, argue that the science is reliable enough to integrate with other risk assessments.

The main obstacle, Nadelhoffer said, is reluctant defense attorneys who fear branding their clients as biologically predisposed toward crime. “Whereas I see it as a mitigating factor,” he said, a jury might not — reasoning that if the problem is in your brain, “Well, then we better lock you up.”

Not all experts agree on the state of the art, however. “There is much research afoot, but the science of neuroprediction is not ready for prime time,” said Francis X. Shen, a senior fellow in law and neuroscience at the Harvard Center for Law, Brain, and Behavior. He said that the current predictive value of biological indicators is overstated.

Even if neuroprediction were accurate enough to be accepted in a courtroom, some question whether judges are properly trained to interpret scientific and statistical evidence. Judge Morris Hoffman, who sits in Colorado’s 2nd Judicial District and was among the first members of the MacArthur Foundation Research Network on Law & Neuroscience, says that tools like neuroprediction could be a “hazard” in the hands of untrained judges. “We judges can become over-reliant on statistical tools because they are shiny and new,” he said.

Federica Coppola, a presidential scholar in society and neuroscience at Columbia University, contends that neuropredictions of future behavior could pose a threat to civil liberties and privacy.

Coppola proposes using a defendant’s brain profile not to mete out punishment, but rather to design alternative sentences based on social and emotional training.

“Especially for young offenders, we can encourage growth in brain areas linked to skills like empathy or self-control,” she writes. Such training could increase their chances of staying out of prison.

About the Author

Andrew Calderon is a data reporting fellow at The Marshall Project. A graduate of the Columbia School of Journalism, he uses programming and data analysis tools to report on the intersection of the environment, science, and the criminal justice system.

This story originally appeared at The Marshall Project, an independent nonprofit news organization covering the U.S. criminal justice system.

Image Credit: Dale Mahalko